Tasks

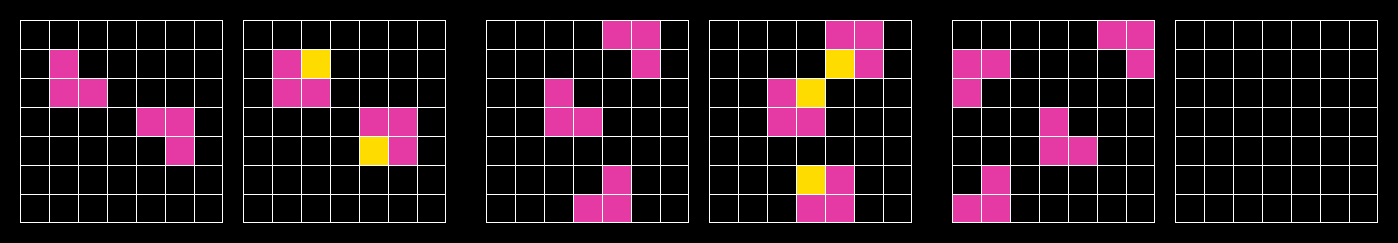

Each ARC-AGI task consists of a handful of example input/output pairs followed by a final test input whose output is not given. Each task tests the use of a specific learned skill that relies on a minimal number of cognitive priors.

In their native form, tasks are stored as JSON, with each grid represented as a list of rows of integers. The same JSON can also be rendered visually as a grid of colors using an ARC-AGI task viewer. You can view an example of a task here.
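Each grid in a task file is a list of rows, and each row is a list of integers from 0 to 9. As a minimal sketch of what a task viewer does, the code below maps each integer to a color name. The `PALETTE` choices and the `render` helper are hypothetical, loosely following a common viewer convention; any mapping works as long as it is applied consistently.

```python
Grid = list[list[int]]

# Hypothetical palette: the data only stores integers 0-9; which color each
# integer is drawn as is a display convention chosen by the viewer.
PALETTE = {
    0: "black", 1: "blue", 2: "red", 3: "green", 4: "yellow",
    5: "grey", 6: "magenta", 7: "orange", 8: "azure", 9: "maroon",
}

def render(grid: Grid) -> str:
    """Render a grid as rows of color names, one grid row per output line."""
    return "\n".join(" ".join(PALETTE[cell] for cell in row) for row in grid)

print(render([[1, 0], [0, 0]]))
# blue black
# black black
```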

A successful submission is a pixel-perfect description (color and position) of the final task's output.
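Because the output must match exactly, a prediction with the right colors but the wrong grid size fails just as a single wrong cell does. A minimal sketch of such a check, with `is_exact_match` as a hypothetical helper name:

```python
Grid = list[list[int]]

def is_exact_match(predicted: Grid, expected: Grid) -> bool:
    """True only if the grids agree in height, every row width, and every cell."""
    # Python compares nested lists element by element (including lengths),
    # so any difference in shape or value makes this False.
    return predicted == expected

expected = [[8, 8], [8, 8]]
print(is_exact_match([[8, 8], [8, 8]], expected))  # True
print(is_exact_match([[8, 8], [8, 0]], expected))  # False: one cell differs
print(is_exact_match([[8, 8, 8, 8]], expected))    # False: wrong shape
```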

ARC-AGI-2

2025 introduces a new version of ARC-AGI: ARC-AGI-2, which uses the same format as ARC-AGI-1. All conventions for public, private, and semi-private data still apply. For more on the new dataset, see the ARC-AGI-2 page.

100% of tasks in the ARC-AGI-2 dataset were solved by at least two people in two attempts or fewer (many were solved by more people). Even so, ARC-AGI-2 is more difficult for AI than ARC-AGI-1.

We recommend using the ARC-AGI-1 dataset for getting started and then moving to ARC-AGI-2 for more advanced solutions. The remainder of this guide will focus on ARC-AGI-2.

Task Data

The following datasets are associated with the ARC Prize competition:

- Public training set

- Public evaluation set

- Private evaluation set

Outside of the competition, there is also a semi-private evaluation set used for the public leaderboard. Learn more.

Public

The publicly available data is to be used for training and evaluation.

The public training set contains 1,000 task files you can use to train your algorithm.

The public evaluation set contains 120 task files you can use to test the performance of your algorithm.

To ensure fair evaluation results, be sure not to leak information from the evaluation set into your algorithm (e.g., by looking at the tasks in the evaluation set yourself during development, or by repeatedly modifying an algorithm while using its evaluation score as feedback).

The source of truth for this data is available on the ARC-AGI GitHub Repository, which contains 1,120 total tasks.
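To sanity-check a local copy of the data against these counts, something like the following sketch could be used. It assumes a clone of the repository laid out as `data/training/*.json` and `data/evaluation/*.json`, one file per task; that layout and the `count_tasks` helper are assumptions, so check the repository before relying on them.

```python
from pathlib import Path

def count_tasks(repo_root: str) -> dict[str, int]:
    """Count task files per split, assuming data/<split>/<task_id>.json (assumed layout)."""
    data_dir = Path(repo_root) / "data"
    # glob() on a missing directory yields nothing, so a wrong path shows up as 0.
    return {
        split: len(list((data_dir / split).glob("*.json")))
        for split in ("training", "evaluation")
    }

if __name__ == "__main__":
    counts = count_tasks("ARC-AGI-2")  # path to a local clone (assumed name)
    print(counts, "total:", sum(counts.values()))
```

On a copy matching the figures above, the counts should come to 1,000 training and 120 evaluation tasks.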

Semi-Private

The semi-private evaluation set contains 120 tasks, which are privately held on Kaggle. These tasks are used for the intra-year competition standings. They are not included in the public tasks, but they use the same structure and cognitive priors.

These tasks are also used to measure the performance of publicly available, closed-source models.

Private

The private evaluation set contains 120 tasks, which are also privately held on Kaggle and are used to measure the ARC-AGI leaderboard. These tasks are kept private to ensure models cannot be trained on them. They are not included in the public tasks, but they use the same structure and cognitive priors.

Please note that the public training set consists of simpler tasks, whereas the public evaluation set is roughly as difficult as the private evaluation set.

Set Difficulty

One of the enhancements made with ARC-AGI-2 is the introduction of difficulty calibration. The Private Evaluation, Public Evaluation, and Semi-Private Evaluation sets are now calibrated to be roughly the same difficulty (within 1 percentage point), as measured by human and AI performance.
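A difficulty gap of under one percentage point (pp) is an absolute difference in accuracy: a solver scoring 30.0% on one set and 30.5% on another shows a 0.5 pp gap. The sketch below computes the largest such gap across sets; the set names and accuracy values are invented for illustration, and `max_gap_pp` is a hypothetical helper, not part of any ARC tooling.

```python
from itertools import combinations

def max_gap_pp(accuracy: dict[str, float]) -> float:
    """Largest pairwise accuracy gap between sets, in percentage points.

    Accuracies are fractions in [0, 1]; needs at least two sets. The absolute
    difference times 100 is a gap in percentage points, not a relative percent.
    """
    return max(abs(a - b) for a, b in combinations(accuracy.values(), 2)) * 100

# Made-up accuracies for one hypothetical solver, for illustration only.
scores = {"public_eval": 0.300, "semi_private_eval": 0.305, "private_eval": 0.298}
gap = max_gap_pp(scores)
print(f"{gap:.1f} pp", gap < 1.0)  # 0.7 pp True
```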

Format

As mentioned above, tasks are stored in JSON format. Each JSON file consists of two key-value pairs.

train: a list of two to ten input/output pairs (typically three). Your algorithm uses these to infer a rule.

test: a list of one to three input/output pairs (typically one). Your model should apply the rule inferred from the train pairs to construct each test output. Test outputs are included in the public data; the outputs for the private evaluation set will not be revealed.
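The `train`/`test` structure can be checked mechanically when loading a task. A minimal sketch, assuming grids are rectangular lists of rows with cell values 0 to 9; `check_grid` and `load_task` are hypothetical helper names, and test outputs are treated as optional because they are withheld for private evaluation.

```python
import json

Grid = list[list[int]]

def check_grid(grid: Grid) -> None:
    """Raise ValueError unless grid is a non-empty rectangle of integers 0-9."""
    if not isinstance(grid, list) or not grid:
        raise ValueError("grid must be a non-empty list of rows")
    width = len(grid[0]) if isinstance(grid[0], list) else 0
    for row in grid:
        if not isinstance(row, list) or width == 0 or len(row) != width:
            raise ValueError("rows must be non-empty lists of equal width")
        if not all(isinstance(cell, int) and 0 <= cell <= 9 for cell in row):
            raise ValueError("cells must be integers from 0 to 9")

def load_task(text: str) -> dict:
    """Parse one task file's contents and validate its train/test structure."""
    task = json.loads(text)
    if "train" not in task or "test" not in task:
        raise ValueError("task must contain 'train' and 'test' keys")
    for pair in task["train"]:
        check_grid(pair["input"])
        check_grid(pair["output"])
    for pair in task["test"]:
        check_grid(pair["input"])
        # Test outputs ship with public data but are withheld for private evaluation.
        if "output" in pair:
            check_grid(pair["output"])
    return task

task = load_task('{"train": [{"input": [[1, 0], [0, 0]], "output": [[1, 1], [1, 1]]}], '
                 '"test": [{"input": [[0, 0], [0, 8]]}]}')
print(len(task["train"]), len(task["test"]))  # 1 1
```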

Here is an example of a simple ARC-AGI task with three training pairs and a single test pair. Every input and output grid is 2x2. Besides 0, which fills the empty cells, four colors appear, represented by the integers 1, 4, 6, and 8. Which actual color (red, green, blue, black, and so on) is assigned to each integer is arbitrary and up to you.

```json
{
  "train": [
    {"input": [[1, 0], [0, 0]], "output": [[1, 1], [1, 1]]},
    {"input": [[0, 0], [4, 0]], "output": [[4, 4], [4, 4]]},
    {"input": [[0, 0], [6, 0]], "output": [[6, 6], [6, 6]]}
  ],
  "test": [
    {"input": [[0, 0], [0, 8]], "output": [[8, 8], [8, 8]]}
  ]
}
```
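To make the infer-then-apply loop concrete, the sketch below hard-codes one candidate rule for this example task ("fill the whole grid with its single non-zero color"), checks it against every training pair, and then applies it to the test input. A real solver would have to discover the rule rather than have it written by hand; `fill_with_single_color` is a hypothetical name, and the rule only fits this toy task.

```python
Grid = list[list[int]]

def fill_with_single_color(grid: Grid) -> Grid:
    """Candidate rule for this example: fill the grid with its one non-zero color."""
    colors = {cell for row in grid for cell in row if cell != 0}
    if len(colors) != 1:
        raise ValueError("rule expects exactly one non-zero color")
    (color,) = colors
    return [[color] * len(row) for row in grid]

task = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[1, 1], [1, 1]]},
        {"input": [[0, 0], [4, 0]], "output": [[4, 4], [4, 4]]},
        {"input": [[0, 0], [6, 0]], "output": [[6, 6], [6, 6]]},
    ],
    "test": [{"input": [[0, 0], [0, 8]], "output": [[8, 8], [8, 8]]}],
}

# Only trust the rule if it reproduces every training output exactly.
rule_fits = all(fill_with_single_color(p["input"]) == p["output"] for p in task["train"])
print(rule_fits)  # True

prediction = fill_with_single_color(task["test"][0]["input"])
print(prediction)  # [[8, 8], [8, 8]]
print(prediction == task["test"][0]["output"])  # True
```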