ARC-AGI-2

2025: Challenging static reasoning systems.

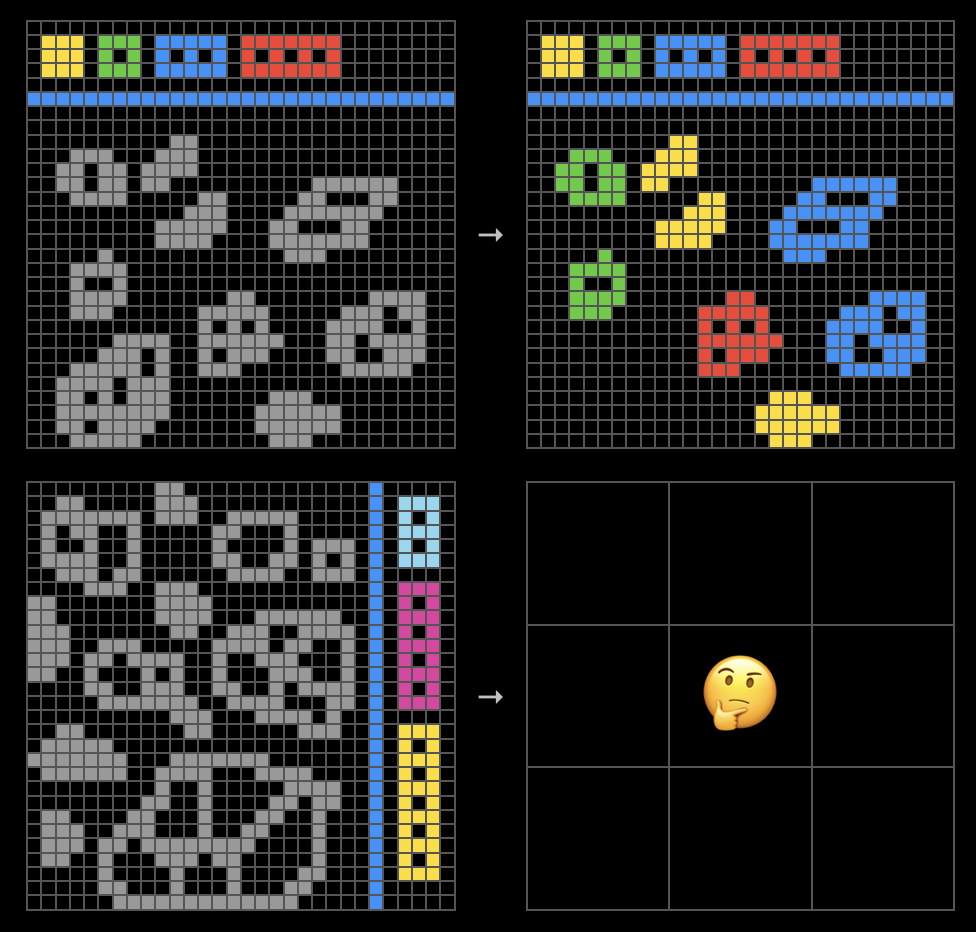

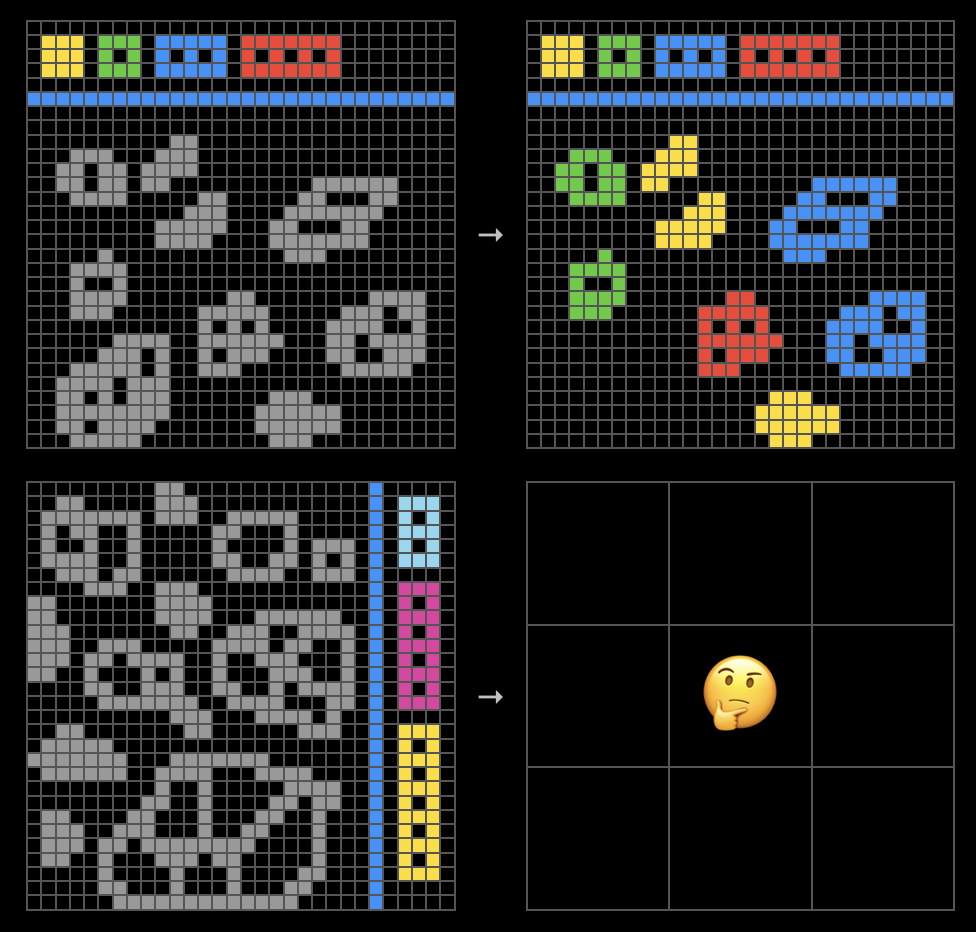

ARC-AGI-2 uses the same format as ARC-AGI-1 but is more resistant to brute force. This benchmark version stress-tests the efficiency and capability of state-of-the-art AI reasoning systems.

The ARC Prize 2025 competition results are in, including the 2025 winners and new open-source approaches.

Founded by Mike Knoop and François Chollet, the ARC Prize Foundation is a non-profit organization with the mission to guide researchers, industry, and regulators towards artificial general intelligence through enduring benchmarks.

The foundation is the steward of the ARC-AGI benchmark, which measures general intelligence through skill acquisition efficiency.

This year's competition has ended. Thank you to the 1,454 teams who participated. More than $125,000 in prizes has been awarded for top papers and top scores.

- 1st Place Paper: Tiny Recursive Reasoning (Jolicoeur-Martineau)

- 1st Place Top Score: NVARC

Join a growing group of mission-aligned supporters who are steering the development of next-gen AI.

If you believe in actively shaping safe and open AGI and want visibility, access, and influence in the world's top AI research community, donate today.